Meet Tyler

A development kit for manifesting AI agents with a complete lack of conventional limitations.

Tyler lets you start building effective AI agents in just a few lines of code. It provides the essential components for production-ready agents that understand context, manage conversations, and use tools effectively.

Key Features

- Multimodal support: Process and understand images, audio, PDFs, and more out of the box

- Ready-to-use tools: A comprehensive set of built-in tools, with easy integration of custom-built tools

- MCP compatibility: Seamless integration with servers and tools that support the Model Context Protocol (MCP)

- Real-time streaming: Build interactive applications with streaming responses from both the assistant and tools

- Structured data model: Built-in support for threads, messages, and attachments to maintain conversation context

- Persistent storage: Choose between in-memory, SQLite, or PostgreSQL to store conversation history and files

- Advanced debugging: Integration with W&B Weave for powerful tracing and debugging capabilities

- Flexible model support: Use any LLM provider supported by LiteLLM (100+ providers, including OpenAI and Anthropic)
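To make the structured data model and persistent storage features more concrete, here is a minimal conceptual sketch of how threads, messages, and attachments might relate, and how a thread could be saved to and restored from SQLite. Every class and method name below (`Attachment`, `Message`, `Thread`, `SQLiteThreadStore`) is hypothetical and written only for illustration using the Python standard library. It is not Tyler's actual API, so consult the API Reference for the real classes.

```python
# Illustrative sketch only: these classes are hypothetical, NOT Tyler's API.
from __future__ import annotations

import json
import sqlite3
from dataclasses import asdict, dataclass, field


@dataclass
class Attachment:
    filename: str
    mime_type: str


@dataclass
class Message:
    role: str  # e.g. "user", "assistant", or "tool"
    content: str
    attachments: list[Attachment] = field(default_factory=list)


@dataclass
class Thread:
    thread_id: str
    messages: list[Message] = field(default_factory=list)

    def add_message(self, message: Message) -> None:
        self.messages.append(message)


class SQLiteThreadStore:
    """Hypothetical store: one JSON document per thread, keyed by thread id."""

    def __init__(self, path: str = ":memory:") -> None:
        # ":memory:" keeps everything in RAM; pass a file path to persist to disk.
        self.conn = sqlite3.connect(path)
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS threads (id TEXT PRIMARY KEY, body TEXT)"
        )

    def save(self, thread: Thread) -> None:
        # asdict() recursively converts nested dataclasses to plain dicts.
        self.conn.execute(
            "INSERT OR REPLACE INTO threads (id, body) VALUES (?, ?)",
            (thread.thread_id, json.dumps(asdict(thread))),
        )
        self.conn.commit()

    def load(self, thread_id: str) -> Thread | None:
        row = self.conn.execute(
            "SELECT body FROM threads WHERE id = ?", (thread_id,)
        ).fetchone()
        if row is None:
            return None
        data = json.loads(row[0])
        messages = [
            Message(
                role=m["role"],
                content=m["content"],
                attachments=[Attachment(**a) for a in m["attachments"]],
            )
            for m in data["messages"]
        ]
        return Thread(thread_id=data["thread_id"], messages=messages)


# Usage: build a thread, save it, and read it back.
store = SQLiteThreadStore()
thread = Thread(thread_id="demo")
thread.add_message(
    Message("user", "Summarize this file", [Attachment("report.pdf", "application/pdf")])
)
store.save(thread)
restored = store.load("demo")
```

Keeping the conversation state in plain data objects is what makes the storage backend interchangeable in a design like this: the same thread could be held in memory, written to SQLite, or sent to PostgreSQL without changing the code that builds it.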

Get started quickly by installing Tyler via pip with `pip install tyler-agent`, then check out our quickstart guide to build your first agent in minutes.

Overview

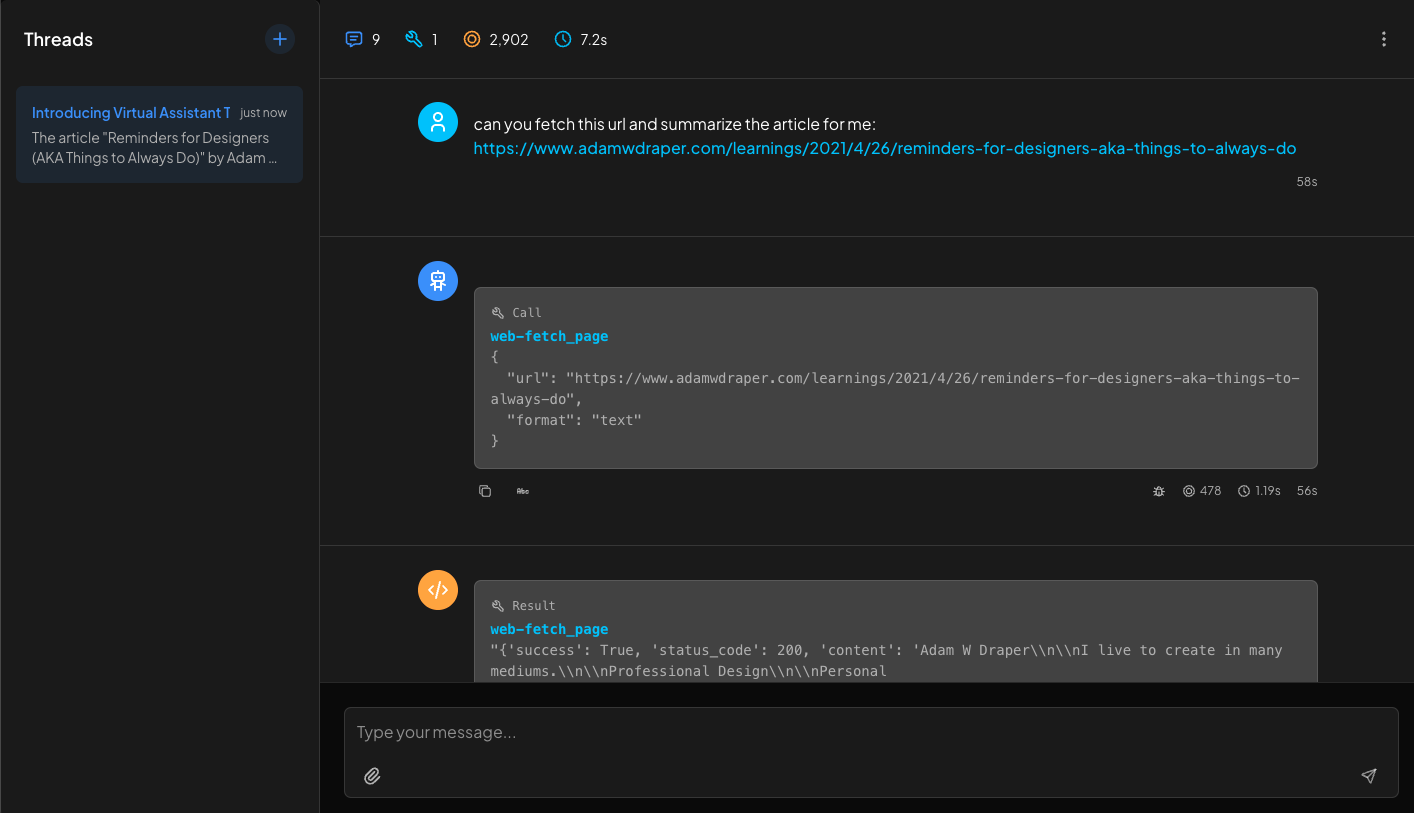

Chat with Tyler

While Tyler can be used as a library, it also has a web-based chat interface that allows you to interact with your agent. The interface is available as a separate repository at tyler-chat.

Key features of Chat with Tyler

- Modern, responsive web interface

- Real-time interaction with Tyler agents

- Support for file attachments

- Message history and context preservation

- Easy deployment and customization

To get started with the chat interface, visit the Chat with Tyler documentation.

Next Steps

- Installation Guide - Detailed installation instructions

- Configuration - Learn about configuration options

- Core Concepts - Understand Tyler's architecture

- API Reference - Explore the API documentation

- Examples - See more usage examples